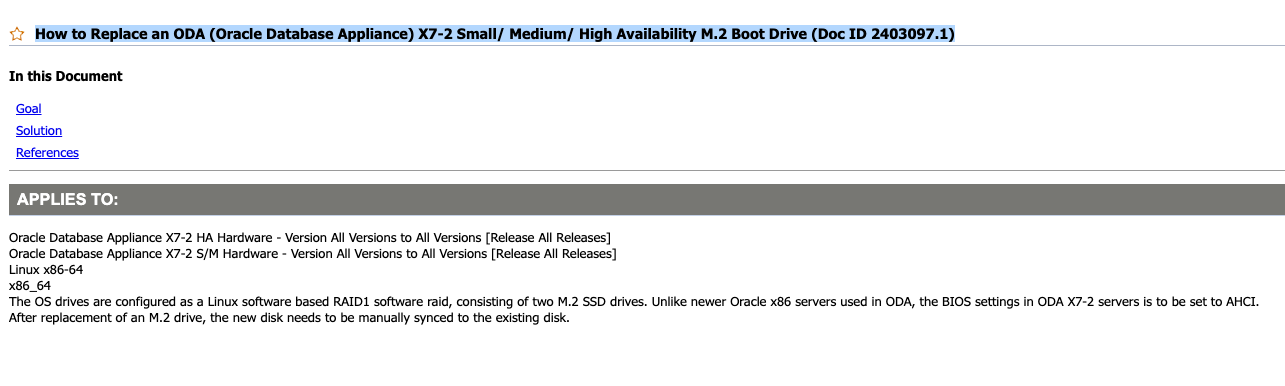

Oracle Database Appliance X7-2 HA Hardware - Version All Versions to All Versions [Release All Releases]

Oracle Database Appliance X7-2 S/M Hardware - Version All Versions to All Versions [Release All Releases]

Linux x86-64

The OS drives are configured as a Linux software RAID1 array consisting of two M.2 SSD drives. Unlike newer Oracle x86 servers used in ODA, the BIOS setting in ODA X7-2 servers must be set to AHCI. After an M.2 drive is replaced, the new disk must be manually synced to the existing disk.

Goal

Describe the procedure needed to replace an M.2 boot drive in a server node of an Oracle Database Appliance X7-2.

Solution

DISPATCH INSTRUCTIONS

- WHAT SKILLS DOES THE FIELD ENGINEER/ADMINISTRATOR NEED?

Training and experience with Oracle Database Appliance systems

TIME ESTIMATE: 30 minutes

TASK COMPLEXITY: 3

FIELD ENGINEER/ADMINISTRATOR INSTRUCTIONS:

PROBLEM OVERVIEW: There are two OS boot drives in each server node of the Oracle Database Appliance X7-2. This document describes how to replace one of these drives.

WHAT STATE SHOULD THE SYSTEM BE IN TO BE READY TO PERFORM THE RESOLUTION ACTIVITY?:

The OS boot drives are NOT hot-swappable. The system must be powered down before you can safely remove the M.2 SSD drive.

WHAT ACTION DOES THE FIELD ENGINEER/ADMINISTRATOR NEED TO TAKE?:

1. Determine which disk drive needs to be replaced.

The faulted device should already have been identified. This example assumes that /dev/sdb has faulted.

# cat /proc/mdstat

Personalities : [raid1]

md0 : active raid1 sda2[0] sdb2[1](F)

511936 blocks super 1.0 [2/1] [U_]

md1 : active raid1 sdb3[1](F) sda3[0]

467694592 blocks super 1.1 [2/1] [U_]

bitmap: 2/4 pages [8KB], 65536KB chunk

unused devices: <none>
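The (F) markers in the output above can also be picked out with a small filter. The following is a minimal sketch, not part of the official procedure; the function name failed_members is hypothetical, and it assumes the mdstat layout shown above.

```shell
# Hypothetical helper: list array members that the kernel has marked as
# failed, i.e. members followed by "(F)" on an "mdN : active ..." line.
# Reads mdstat-format text on stdin, prints "<array> <member>" per match.
failed_members() {
    awk '$2 == ":" && $1 ~ /^md/ {
        for (i = 3; i <= NF; i++)
            if ($i ~ /\(F\)$/) {
                m = $i
                sub(/\[[0-9]+\]\(F\)$/, "", m)   # strip the "[1](F)" suffix
                print $1, m
            }
    }'
}
```

On the node it would be used as: failed_members < /proc/mdstat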

Note: On a Virtualized platform, run all commands from DOM0.

# smartctl -a /dev/sdb

smartctl 5.43 2016-09-28 r4347 [x86_64-linux-4.1.12-112.16.8.el6uek.x86_64] (local build)

Copyright (C) 2002-12 , http://smartmontools.sourceforge.net

=== START OF INFORMATION SECTION ===

Device Model: INTEL SSDSCKJB480G7

Serial Number: PHDW7320015T480B <<<<<<<<<<<<<<<<<<<<<<< this is written on SSD, for visual identification

LU WWN Device Id: 5 5cd2e4 14e1fc52f

Firmware Version: N2010112

User Capacity: 480,103,981,056 bytes [480 GB]

Sector Sizes: 512 bytes logical, 4096 bytes physical

Device is: Not in smartctl database [for details use: -P showall]

ATA Version is: 8

ATA Standard is: ACS-3 (unknown minor revision code: 0x006d)

Local Time is: Thu May 24 10:41:16 2018 MDT

SMART support is: Available - device has SMART capability.

SMART support is: Enabled
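Because the serial number is what identifies the drive physically, it can help to extract just that field. This is a small sketch that assumes the "Serial Number:" line format shown above; the function name smart_serial is hypothetical.

```shell
# Hypothetical helper: print the serial number from `smartctl -a` output
# read on stdin. Only the third whitespace-separated field of the
# "Serial Number:" line is printed.
smart_serial() {
    awk '$1 == "Serial" && $2 == "Number:" { print $3; exit }'
}
```

Example use: smartctl -a /dev/sdb | smart_serial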

2. Prepare the server for service.

Power off the server and disconnect the power cords from the power supplies. Extend the server to the maintenance position in the rack. Attach an anti-static wrist strap. Remove the top cover.

3. Locate and remove the failed M.2 Solid State Drive from the PCIe riser for PCIe slots 3/4.

NOTE: For a document that includes a video of changing the hardware, see

4. Return the Server to operation

Replace the top cover. Remove any anti-static measures that were used. Return the server to its normal operating position within the rack. Re-install the AC power cords and any data cables that were removed but not yet replaced.

5. Log in to the ILOM and check whether any faults were logged against the PCIe slot. If so, clear the fault by logging into the fault management shell.

> start /SP/faultmgmt/shell

Are you sure you want to start /SP/faultmgmt/shell (y/n)? y

faultmgmtsp> fmadm faulty

...

FRU : /SYS/MB/PCIE3

...

faultmgmtsp> fmadm repaired /SYS/MB/PCIE3

faultmgmtsp> fmadm faulty -a

No faults found

faultmgmtsp> exit

Power on the server. Verify that the Power/OK indicator LED lights steady on.

6. Sync the new disk with the old disk

If you are running ODA version 12.2.1.3.0 or below, you will need to install the gdisk package manually on the node.

If you are running a Virtualized platform, the gdisk package is already included, so there is no need to re-install this rpm.

# rpm -ivh gdisk-0.8.10-1.el6.x86_64.rpm

warning: gdisk-0.8.10-1.el6.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY

Preparing... ########################################### [100%]

1:gdisk ########################################### [100%]
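Before installing the rpm, it may be worth checking whether the tool is already present. The sketch below assumes that the sgdisk command used in the following steps is supplied by the gdisk package; the function name have_sgdisk is hypothetical.

```shell
# Hypothetical helper: succeed if an sgdisk executable is found on PATH.
have_sgdisk() {
    command -v sgdisk >/dev/null 2>&1
}
```

Example use: have_sgdisk || echo "sgdisk not found, install the gdisk rpm first"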

As you can see from the following command, there is only one disk in the raid1.

# cat /proc/mdstat

Personalities : [raid1]

md0 : active raid1 sda2[0] <<<<<<<<<<<<<<<<<<< only sda

511936 blocks super 1.0 [2/1] [U_]

md1 : active raid1 sda3[0]

467694592 blocks super 1.1 [2/1] [U_]

bitmap: 2/4 pages [8KB], 65536KB chunk

This is an example from a Virtualized platform, where /dev/sda has been replaced.

# cat /proc/mdstat

Personalities : [raid1]

md3 : active raid1 sdb5[0]

443118592 blocks super 1.1 [2/1] [U_]

bitmap: 3/4 pages [12KB], 65536KB chunk

md0 : active raid1 sdb4[1]

511936 blocks super 1.0 [2/1] [_U]

md1 : active raid1 sdb2[0]

20463616 blocks super 1.1 [2/1] [U_]

bitmap: 1/1 pages [4KB], 65536KB chunk

md2 : active raid1 sdb3[0] sda3[1]

4093952 blocks super 1.1 [2/2] [UU]

unused devices: <none>
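In the listings above, a degraded array is recognizable by an underscore in its status map ([U_] or [_U]), while a healthy one shows [UU]. As a rough sketch under that assumption, the degraded arrays can be listed as follows; the function name degraded_arrays is hypothetical.

```shell
# Hypothetical helper: print the name of every md array whose status map
# (the last field of the "blocks" line, e.g. "[U_]") contains "_".
# Reads mdstat-format text on stdin.
degraded_arrays() {
    awk '$2 == ":" && $1 ~ /^md/ { name = $1; next }
         name != "" && $NF ~ /^\[[U_]+\]$/ {
             if ($NF ~ /_/) print name
             name = ""
         }'
}
```

Example use: degraded_arrays < /proc/mdstat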

To find the device name of the new disk, use lsscsi or odaadmcli. In some cases, the device name may change, so it's important to check.

# odaadmcli show disk -local

/dev/sda ahci:00:17.0 1

/dev/sdb ahci:00:17.0 2

For systems running Virtualized platform, use lsscsi from DOM0.

# lsscsi |grep INTEL

[1:0:0:0] disk ATA INTEL SSDSCKJB48 0112 /dev/sda

[2:0:0:0] disk ATA INTEL SSDSCKJB48 0112 /dev/sdb
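As an additional cross-check, the disk names reported above can be compared against /proc/mdstat. The sketch below is only a heuristic: it assumes that a freshly replaced disk does not yet have a member in any array, which may not hold on every platform, so the result should still be confirmed against the serial number on the drive. The function name disks_without_md_members is hypothetical.

```shell
# Hypothetical helper: for each whole-disk name given as an argument
# (e.g. sda sdb), print the ones that have no member in any array listed
# in the mdstat-format text read on stdin.
disks_without_md_members() {
    mdstat=$(cat)
    for disk in "$@"; do
        # A member looks like "sdb2[1]" (partition) or "sdb[2]" (whole disk).
        if ! printf '%s\n' "$mdstat" | grep -Eq "(^| )${disk}[0-9]*\["; then
            printf '%s\n' "$disk"
        fi
    done
}
```

Example use: disks_without_md_members sda sdb < /proc/mdstat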

Prepare the new disk to sync with the old disk.

First, back up the GUID partition table from the good drive. In case of corruption, it can be restored from the backup file.

# sgdisk -b sda_partition_backup /dev/sda

The operation has completed successfully.

Restore the good drive's partition table to the new drive.

# sgdisk -l sda_partition_backup /dev/sdb

Warning: The kernel is still using the old partition table.

The new table will be used at the next reboot.

The operation has completed successfully

Randomize the GUIDs on the new drive. The restored table carries the good drive's GUIDs, so this step must come after the restore.

# sgdisk -G /dev/sdb

Verify that the partition table matches that of the other disk, and ensure that the disk GUIDs on the two disks are different.

# sgdisk -p /dev/sda

Disk /dev/sda: 937703088 sectors, 447.1 GiB

Logical sector size: 512 bytes

Disk identifier (GUID): 88BFF7AB-3DD4-43FA-834D-1F5877FA3C11 <=== GUID

Partition table holds up to 128 entries

First usable sector is 34, last usable sector is 937703054

Partitions will be aligned on 2048-sector boundaries

Total free space is 3693 sectors (1.8 MiB)

Number Start (sector) End (sector) Size Code Name

1 2048 1026047 500.0 MiB 0700

2 1026048 2050047 500.0 MiB FD00

3 2050048 937701375 446.2 GiB FD00

# sgdisk -p /dev/sdb

Disk /dev/sdb: 937703088 sectors, 447.1 GiB

Logical sector size: 512 bytes

Disk identifier (GUID): DF95583E-DC68-4B3A-853E-26045B1308B8 <== GUID

Partition table holds up to 128 entries

First usable sector is 34, last usable sector is 937703054

Partitions will be aligned on 2048-sector boundaries

Total free space is 3693 sectors (1.8 MiB)

Number Start (sector) End (sector) Size Code Name

1 2048 1026047 500.0 MiB 0700

2 1026048 2050047 500.0 MiB FD00

3 2050048 937701375 446.2 GiB FD00
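The comparison of the two listings can also be done mechanically. The following is a minimal sketch that assumes each `sgdisk -p` listing has been saved to a text file; the function names and the file names in the usage note are hypothetical.

```shell
# Hypothetical helpers for comparing two saved `sgdisk -p` listings.

# Print the disk GUID from a listing read on stdin.
disk_guid() {
    awk '/^Disk identifier \(GUID\):/ { print $4; exit }'
}

# Print only the partition rows (number, start sector, end sector, ...).
partition_rows() {
    awk '$1 ~ /^[0-9]+$/ && $2 ~ /^[0-9]+$/ && $3 ~ /^[0-9]+$/'
}

# Succeed when both listings have identical partition rows
# and different disk GUIDs.
layouts_match_guids_differ() {
    [ "$(partition_rows < "$1")" = "$(partition_rows < "$2")" ] &&
    [ "$(disk_guid < "$1")" != "$(disk_guid < "$2")" ]
}
```

Example use, after running sgdisk -p /dev/sda > sda.txt and sgdisk -p /dev/sdb > sdb.txt: layouts_match_guids_differ sda.txt sdb.txt && echo "layout OK, GUIDs differ"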

Clone the EFI System Partition (ESP) from the healthy drive.

# dd if=/dev/sda1 of=/dev/sdb1

1024000+0 records in

1024000+0 records out

524288000 bytes (524 MB) copied, 8.55395 s, 61.3 MB/s
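The copy can be followed by a byte-for-byte comparison to confirm that the clone succeeded. This is a sketch only, assuming source and destination are the same size (as they are once the partition tables match); the function name clone_and_verify is hypothetical.

```shell
# Hypothetical helper: copy SRC to DST with dd, then compare the two
# byte for byte. bs=1048576 (1 MiB) only changes the I/O chunk size,
# not the data that is copied.
clone_and_verify() {
    src=$1
    dst=$2
    dd if="$src" of="$dst" bs=1048576 2>/dev/null || return 1
    cmp -s "$src" "$dst"
}
```

Example use: clone_and_verify /dev/sda1 /dev/sdb1 && echo "ESP clone verified"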

If this is an HA system, you will need to add the scsi_id of the new drive to /etc/multipath.conf. (This is not needed on a Virtualized platform.)

Find the scsi_id of the new drive.

# scsi_id -g -u /dev/sdb

355cd2e414e218f8e <<<<<<<<<<<<<<<< scsi id of new disk

Add the new scsi_id to /etc/multipath.conf

# vi /etc/multipath.conf

#Multipath File autogenerate by Comet StorageManagement--DO NOT REMOVE THIS LINE

blacklist {

devnode "^asm/*"

devnode "ofsctl"

devnode "xvd*"

wwid 355cd2e414e2264b1

wwid 355cd2e414e218f8e <<<<<<<<<<<< add scsi id of new disk

Restart the multipath service.

# service multipathd reload

Reloading multipathd: [ OK ]

Or, if you are running a later ODA software version based on Oracle Linux 7:

# systemctl restart multipathd
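After editing the file, it can be useful to confirm that the new id really is inside the blacklist section. The following is a minimal sketch that assumes the section is written as "blacklist {" and closed by a line containing only "}"; the function name wwid_blacklisted is hypothetical.

```shell
# Hypothetical helper: succeed if WWID ($2) appears as a "wwid" entry
# inside the blacklist { ... } section of the multipath.conf-style
# file FILE ($1). Optional double quotes around the id are ignored.
wwid_blacklisted() {
    awk -v id="$2" '
        $1 == "blacklist"       { in_bl = 1; next }
        in_bl && $1 == "}"      { in_bl = 0 }
        in_bl && $1 == "wwid"   { w = $2; gsub(/"/, "", w); if (w == id) found = 1 }
        END { exit !found }
    ' "$1"
}
```

Example use: wwid_blacklisted /etc/multipath.conf 355cd2e414e218f8e && echo "already blacklisted"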

7. Sync the md partitions.

# cat /proc/mdstat

Personalities : [raid1]

md0 : active raid1 sda2[0]

511936 blocks super 1.0 [2/1] [U_]

md1 : active raid1 sda3[0]

467694592 blocks super 1.1 [2/1] [U_]

bitmap: 2/4 pages [8KB], 65536KB chunk

unused devices: <none>

For a Bare Metal platform:

# mdadm -a /dev/md0 /dev/sdb2

mdadm: added /dev/sdb2

# mdadm -a /dev/md1 /dev/sdb3

mdadm: re-added /dev/sdb3

Here is an example from a Virtualized platform:

# mdadm -a /dev/md3 /dev/sda5

mdadm: re-added /dev/sda5

# mdadm -a /dev/md0 /dev/sda4

mdadm: added /dev/sda4

# mdadm -a /dev/md1 /dev/sda2

mdadm: re-added /dev/sda2

Check the status of the sync.

# cat /proc/mdstat

Personalities : [raid1]

md0 : active raid1 sdb2[2] sda2[0]

511936 blocks super 1.0 [2/2] [UU]

md1 : active raid1 sdb3[1] sda3[0]

467694592 blocks super 1.1 [2/1] [U_]

[=>...................] recovery = 9.1% (42958080/467694592) finish=35.3min speed=200163K/sec

bitmap: 2/4 pages [8KB], 65536KB chunk

unused devices: <none>
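The recovery line above can be reduced to a short progress summary. This sketch assumes the "recovery = N%" format shown in the output; the function name recovery_progress is hypothetical.

```shell
# Hypothetical helper: print "<array> <percent>" for every array that
# shows a "recovery = N%" line in the mdstat-format text read on stdin.
recovery_progress() {
    awk '$2 == ":" && $1 ~ /^md/ { name = $1 }
         /recovery *=/ {
             for (i = 1; i <= NF; i++)
                 if ($i ~ /^[0-9.]+%$/) print name, $i
         }'
}
```

Example use: recovery_progress < /proc/mdstat. When the command prints nothing, no array is reporting a recovery in progress.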

If this is an HA system, you will need to add the wwid of the new drive to /etc/multipath.conf. (This is not needed on a Virtualized platform or on Small/Medium systems.)

Find the wwid of the new drive.

On Oracle Linux 7 and above, use the /usr/lib/udev/ata_id command; otherwise, use /lib/udev/ata_id to retrieve the wwid of the new M.2 disk.

# /lib/udev/ata_id /dev/sda

INTEL_SSDSCKJB480G7_PHDW734004AU480B

# /usr/lib/udev/ata_id /dev/sda

INTEL_SSDSCKKB480G8_PHYH920500UW480K
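Which of the two ata_id paths applies can be decided by checking which one exists. The sketch below tries /usr/lib/udev/ata_id first and falls back to /lib/udev/ata_id; the function name find_ata_id and its optional root-prefix argument are hypothetical, the latter existing only so the function can be exercised outside a real system.

```shell
# Hypothetical helper: print the path of the first ata_id helper that
# exists and is executable. The optional argument is a directory prefix
# (default: empty, i.e. the real filesystem root).
find_ata_id() {
    root=${1:-}
    for p in /usr/lib/udev/ata_id /lib/udev/ata_id; do
        if [ -x "$root$p" ]; then
            printf '%s\n' "$root$p"
            return 0
        fi
    done
    return 1
}
```

Example use: "$(find_ata_id)" /dev/sda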

Add the new wwid to /etc/multipath.conf

# vi /etc/multipath.conf

#Multipath File autogenerate by Comet StorageManagement--DO NOT REMOVE THIS LINE

blacklist {

devnode "^asm/*"

devnode "ofsctl"

devnode "xvd*"

wwid 355cd2e414e2264b1

wwid INTEL_SSDSCKKB480G8_PHYH920500UW480K <<<<<<<<<<<< add wwid of new disk

Restart the multipath service.

# service multipathd reload

Reloading multipathd: [ OK ]

Or, on Oracle Linux 7 and above:

# systemctl restart multipathd

Sync the md partitions. The procedure is the same for Bare Metal and Virtualized platforms; in a Virtualized environment, make sure you are in DOM0.

# mdadm -a /dev/md0 /dev/sdb

mdadm: added /dev/sdb

Check the status of the sync.

# cat /proc/mdstat

Personalities : [raid1]

md126 : active raid1 sdb[2] sda[0]

468847616 blocks super external:/md0/0 [2/1] [_U]

[====>................] recovery = 20.1% (94629504/468848384) finish=31.1min speed=200060K/sec

md0 : inactive sdb[1](S) sda[0](S)

6306 blocks super external:imsm

unused devices: <none>

For a Small/Medium system, you will need to add sda back into the md device:

# mdadm -a /dev/md127 /dev/sda

mdadm: added /dev/sda

Check the status of the sync.

# cat /proc/mdstat

Personalities : [raid1]

md126 : active raid1 sda[2] sdb[1]

468845568 blocks super external:/md127/0 [2/1] [U_]

[>....................] recovery = 0.2% (1201536/468845568) finish=32.4min speed=240307K/sec

md127 : inactive sda[1](S) sdb[0](S)

6306 blocks super external:imsm

unused devices: <none>
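In both examples above, the device passed to mdadm -a (md0 and md127) is the one listed as inactive with "super external:imsm", while md126 is the array that rebuilds. As a rough sketch based on that observation, the array to use can be looked up as follows; the function name imsm_containers is hypothetical.

```shell
# Hypothetical helper: print the name of each md device whose "blocks"
# line ends in "external:imsm" in the mdstat-format text read on stdin.
imsm_containers() {
    awk '$2 == ":" && $1 ~ /^md/ { name = $1; next }
         name != "" && $NF == "external:imsm" { print name; name = "" }'
}
```

Example use: imsm_containers < /proc/mdstat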

8. Confirm boot order is correct

Add a UEFI boot entry for the replaced disk, using the appropriate label:

For a Bare Metal platform, use "Oracle DB Appliance" for /dev/sda and "ODA Backup EFI" for /dev/sdb.

# efibootmgr -c -g -d /dev/sdb -p 1 -L "ODA Backup EFI" -l '\EFI\redhat\grubx64.efi'

# efibootmgr -c -g -d /dev/sda -p 1 -L "Oracle DB Appliance" -l '\EFI\redhat\grubx64.efi'

For a Virtualized platform, use "Oracle VM Server" for /dev/sda and "OVM Backup EFI" for /dev/sdb.

# efibootmgr -c -g -d /dev/sdb -p 1 -L "OVM Backup EFI" -l '\EFI\redhat\grubx64.efi'

# efibootmgr -c -g -d /dev/sda -p 1 -L "Oracle VM Server" -l '\EFI\redhat\grubx64.efi'

Delete any duplicate boot entries, where XXXX is the boot number of the duplicate entry as listed by efibootmgr:

# efibootmgr -b XXXX -B

Use efibootmgr to change the boot order

# efibootmgr -o 0000,0004,0001,0002,0003

BootCurrent: 0000

Timeout: 1 seconds

BootOrder: 0000,0004,0001,0002,0003

Boot0000* Oracle DB Appliance

Boot0001* NET0:PXE IP4 Intel(R) I210 Gigabit Network Connection

Boot0002* NET1:PXE IP4 Oracle Dual Port 10GBase-T Ethernet Controller

Boot0003* NET2:PXE IP4 Oracle Dual Port 10GBase-T Ethernet Controller

Boot0004* ODA Backup EFI

Example: Virtualized platform.

# efibootmgr

BootCurrent: 0001

Timeout: 1 seconds

BootOrder: 0009,0001,0005,0006,0007,0008,0004,000A

Boot0001* OVM Backup EFI

Boot0004* NET0:PXE IP4 Intel(R) I210 Gigabit Network Connection

Boot0005* NET1:PXE IP4 Oracle Dual Port 10Gb/25Gb SFP28 Ethernet Controller

Boot0006* NET2:PXE IP4 Oracle Dual Port 10Gb/25Gb SFP28 Ethernet Controller

Boot0007* PCIE1:PXE IP4 Oracle Dual Port 25Gb Ethernet Adapter

Boot0008* PCIE1:PXE IP4 Oracle Dual Port 25Gb Ethernet Adapter

Boot0009* Oracle VM Server

Boot000A* USB:SUN
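Duplicate entries can be spotted by listing the boot labels and looking for repeats. The following is a minimal sketch that assumes the "BootXXXX* label" line format shown above; the function names boot_entries and duplicate_labels are hypothetical.

```shell
# Hypothetical helpers for reading `efibootmgr` output on stdin.

# Print "<number><TAB><label>" for each BootXXXX entry. Anything after a
# tab in the label (some efibootmgr versions append a device path there)
# is dropped.
boot_entries() {
    awk '/^Boot[0-9A-Fa-f][0-9A-Fa-f][0-9A-Fa-f][0-9A-Fa-f]/ {
        num = substr($1, 5, 4)
        label = $0
        sub(/^[^ \t]+[ \t]+/, "", label)   # drop the "Boot0000* " prefix
        sub(/\t.*$/, "", label)
        print num "\t" label
    }'
}

# Print every label that occurs on more than one boot entry.
duplicate_labels() {
    boot_entries | cut -f2- | sort | uniq -d
}
```

Example use: efibootmgr | duplicate_labels. The boot numbers for a reported label can then be read from efibootmgr | boot_entries.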

9. Reboot